There’s been a fair amount of buzz in the IT world about IT-Business alignment lately. The main complaint is that IT produces solutions that are simply too expensive. Proposed remedies range from “Agile” methodologies to dissolving the contemporary IT group into the rest of the enterprise.

I think there’s another piece that the industry is failing to fully explore.

What I’ve observed is that the most expensive part of application development is actually the communications overhead. It seems to me that the number one reason for bad apps, delays, and outright project failures is communications issues. Getting it “right” is always expensive. (Getting it wrong is dramatically worse.) In the current IT industry, getting it right typically means teaching analysts, technical writers, developers, QA, and help desk significant aspects of the problem domain, along with all the underlying technologies they need to know.

In the early days of “application development”, software-based applications were most often developed by savvy business users with tools like Lotus 1-2-3. The really savvy types dug in on dBase. We all know why this didn’t work, and the ultimate response was client-server technology. Unfortunately, the client-server application development methodologies also entrenched this broad knowledge sharing requirement.

So how do you smooth out this wrinkle? I believe Business Analytics, SOA/BPM, Semantic web, portals/portlets… they’re providing hints.

There have been a few times in my career where I was asked to provide rather nebulous functionality to customers. Specifically, I can think of two early client-server projects where the users wanted to be able to query a database in the uncertain terms of their problem domain. In both of these cases, I built application UIs that allowed the user to express query details in easy, domain-specific terms. User expressions were translated dynamically by software into SQL. All of the technical jargon was hidden away from the user. I was even able to allow users to save favorite queries and share them with co-workers. These apps enabled the users to look at all their information in ways that no one, not even I, had considered beforehand. The apps worked without giving up the advances of client-server technology, and without forcing the user into technical learning curves. These projects were both delivered on time and on budget. Just as importantly, they were considered great successes.
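To make the idea concrete, here is a minimal sketch in Python of that kind of translation layer. It is not the code from those projects. The `orders` table, the vocabulary in `FIELD_MAP` and `OPERATOR_MAP`, and the function names are all hypothetical, chosen only for illustration.

```python
import sqlite3

# Hypothetical mapping from business vocabulary to schema columns.
FIELD_MAP = {
    "customer region": "region",
    "order total": "total",
    "order status": "status",
}

# Hypothetical domain phrasing for comparisons, mapped to SQL operators.
OPERATOR_MAP = {
    "is": "=",
    "is at least": ">=",
    "is at most": "<=",
}


def build_query(conditions):
    """Translate (field, operator, value) triples into parameterized SQL."""
    clauses, params = [], []
    for field, op, value in conditions:
        # Unknown terms raise KeyError, so only whitelisted column names
        # and operators ever reach the SQL text. Values go in as parameters.
        clauses.append(f"{FIELD_MAP[field]} {OPERATOR_MAP[op]} ?")
        params.append(value)
    where = " AND ".join(clauses) if clauses else "1 = 1"
    return f"SELECT id FROM orders WHERE {where} ORDER BY id", params


def run_query(conn, conditions):
    """Run a domain-level query and return the matching order ids."""
    sql, params = build_query(conditions)
    return [row[0] for row in conn.execute(sql, params)]


def demo():
    """Build a tiny in-memory database and run one saved query against it."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE orders (id INTEGER, region TEXT, total REAL, status TEXT)"
    )
    conn.executemany(
        "INSERT INTO orders VALUES (?, ?, ?, ?)",
        [
            (1, "West", 250.0, "open"),
            (2, "East", 900.0, "open"),
            (3, "West", 1200.0, "closed"),
        ],
    )
    # A "favorite query" is just data, expressed in the user's own terms.
    saved = [
        ("customer region", "is", "West"),
        ("order total", "is at least", 500),
    ]
    return run_query(conn, saved)
```

Because a query here is only a list of domain terms and values, saving a favorite or sharing it with a co-worker amounts to storing and passing around that list. The user never sees a column name or a line of SQL.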

In more recent times, certain trends have caught my attention: the popularity of BI (especially cube analysis) and portals/portlets. Of all the tools and technologies out there, these are the ones actively demanded by business end-users. At the same time, classic software application development seems to be in relatively reduced demand.

Pulling it all together, it seems like IT departments have tied business innovation to the rigors of client-server software application development. Doing so brings along all the communications overhead that goes with doing it right.

It seems like we need a new abstraction on top of software… a layer that splits technology out of the problem domain, allowing business users to develop their own applications.

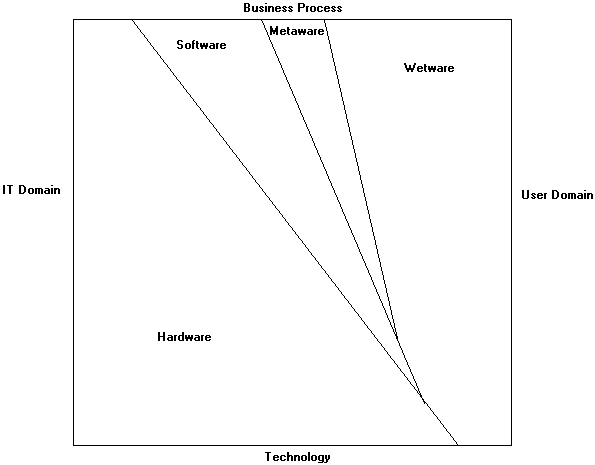

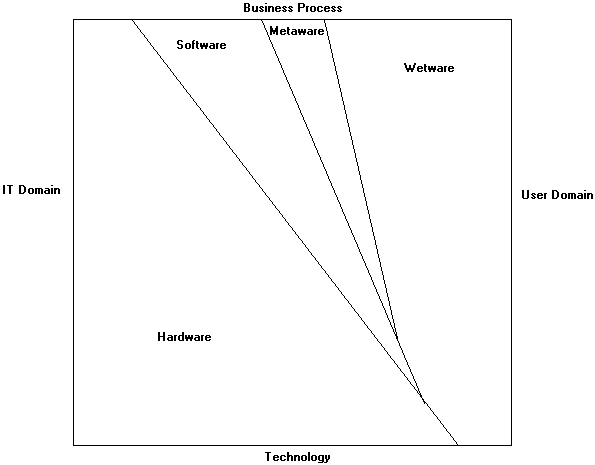

I’ve hijacked the word “metaware” as a way of thinking about the edge between business users as process actors (wetware) and software. Of course, it’s derived from metadata application concepts. At first, it seems hard to grasp, but the more I use it, the more it seems to make sense to me.

Here is how I approach the term…

As I’ve mentioned in the past, I think of people’s roles in business systems as “wetware”. Wikipedia has an entry for wetware that describes its use in various domains. Wetware is great at problem solving.

Why don’t we implement all solutions using wetware?

It’s not fast, reliable, or consistent enough for modern business needs. Frankly, wetware doesn’t scale well.

Hardware, of course, is easy to grasp… it’s the physical machine. It tends to be responsible for physical storage and high-speed signal transmission, as well as providing the calculation iron and general processing brains for the software. It’s lightning fast, and extremely reliable. Hardware is perfect in the physical world… if you intend to produce physical products, you need hardware. Hardware applications extend all the way out to wetware, typically in the form of human interfaces. (The term HID tends to neglect output such as displays. I think that’s an oversight… just because monitors don’t connect to USB ports doesn’t mean they’re not human interface devices.)

Why do we not use hardware to implement all solutions?

Because hardware is very expensive to manipulate, and it takes lots of specialized tools and engineering know-how to implement relatively small details. The turnaround time on changes makes it impractical, from a risk-management standpoint, for general purpose / business application development.

Software in the contemporary sense is also easy to grasp. It is most often thought to provide a layer on top of a general purpose hardware platform to integrate hardware and create applications with semantics in a particular domain. Software is also used to smooth out differences between hardware components and even other software components. It even smooths over differences in wetware by making localization, configuration, and personalization easier. Software is the concept that enabled the modern computing era.

When is software the wrong choice for an application?

Application software becomes a problem when it doesn’t respect separation of concerns between integration points. The most critical “integration point” abstraction that gets flubbed is between business process and the underlying technology. Typically, general purpose application development tools are still too technical for user domain developers, and so quite a bit of communications overhead is required even for small changes. This communications overhead becomes expensive, and is complicated by generally difficult deployment issues. While significant efforts have been made to reduce the communications overhead, these tend to attempt to eliminate artifacts that are necessary for the continued maintenance and development of the system.

Enter metaware. Metaware is similar in nature to software. It runs entirely on a software-supported client-server platform. Most software engineers would think of it as process owners’ expressions interpreted dynamically by software. It’s the culmination of SOA/BPM… for example, a process drawn in BPMN (the notation) that is then rendered as a business application by an enterprise software system.
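As a rough illustration of “process owners’ expressions interpreted dynamically by software”, here is a small Python sketch. It is not BPMN and not any particular product. The expense process, its states, and the function names are all hypothetical. The point is only that the process lives in plain data that a business user could own, while the interpreter is the part IT builds once.

```python
# A process owner's expression: plain data, no code.
# Every state, actor, and action name here is hypothetical.
EXPENSE_PROCESS = {
    "start": "submitted",
    "steps": {
        "submitted": {"actor": "employee", "actions": {"send": "review"}},
        "review": {
            "actor": "manager",
            "actions": {"approve": "paid", "reject": "submitted"},
        },
        "paid": {"actor": None, "actions": {}},
    },
}


def advance(process, state, actor, action):
    """Return the next state, or raise if the definition forbids the move."""
    step = process["steps"][state]
    if step["actor"] != actor:
        raise PermissionError(f"{actor} cannot act in state {state}")
    if action not in step["actions"]:
        raise ValueError(f"{action} is not allowed in state {state}")
    return step["actions"][action]


def run(process, moves):
    """Replay a list of (actor, action) moves from the process's start state."""
    state = process["start"]
    for actor, action in moves:
        state = advance(process, state, actor, action)
    return state
```

Changing the business process means editing `EXPENSE_PROCESS`, not the interpreter. The interpreter still enforces who may do what, which is the “technologically safe framework” part of the bargain.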

While some might dismiss the idea of metaware as buzz, it suggests important changes to the way IT departments might write software. Respecting the metaware layer will affect the way I design software in the future. Further, respecting metaware concepts suggests important changes in the relationship between IT and the rest of the enterprise.

Ultimately it cuts costs in application development by restoring separation of concerns… IT focuses on building and managing technology to enable business users to express their artifacts in a technologically safe framework. Business users can then get back to innovating without IT in their hair.